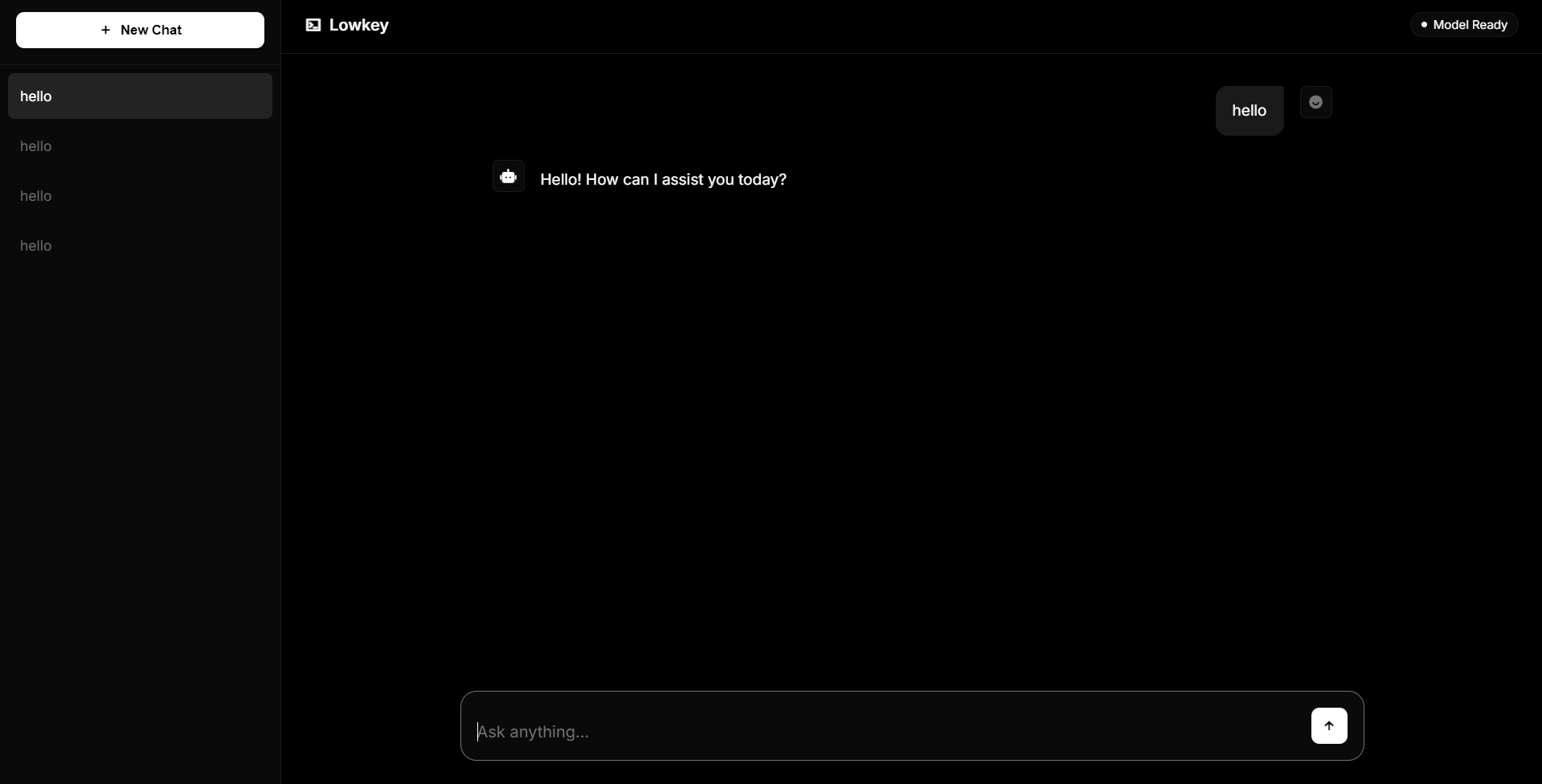

Private. Offline. Fast.

Lowkey is a local-first AI chat app that runs entirely on your machine. No cloud. No tracking. No bullshit.

Runs fully offline using local models like Qwen and Gemma.

Local-First

Runs models directly on your hardware

Offline by Default

No internet required after setup

Streaming Responses

Tokens appear as they are generated

Open Model Support

Qwen, Gemma, LLaMA, and other GGUF models

No Accounts

Just install and use

Cross-Platform

Windows & Linux

Why Local AI?

- Your data never leaves your machine

- No subscriptions

- Predictable performance: speed depends on your hardware, not on a remote server

- Works even without internet

Frequently Asked Questions

General

What is Lowkey?

Lowkey is an offline, local-first AI chat application that runs entirely on your computer using local language models.

Is Lowkey free to use?

Yes. Lowkey is completely free and does not require subscriptions or accounts.

Does Lowkey require internet?

Only for the initial model download. After setup, Lowkey works fully offline.

Privacy & Security

Does Lowkey send my data to the cloud?

No. All conversations stay on your machine. No data is uploaded, logged, or tracked.

Is any analytics or telemetry collected?

No analytics, no tracking, no telemetry. Your usage stays private.

Where are chats stored?

Chats are stored locally on your device inside the app’s data directory.

Models & Performance

Which AI models does Lowkey support?

Lowkey supports GGUF models such as Qwen, Gemma, and other llama.cpp-compatible models.

Can I use my own models?

Yes. You can place your own GGUF models in the models directory.
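If you want to sanity-check a folder of downloaded models before pointing Lowkey at it, something like the sketch below can help. It relies on one fact about the format (every GGUF file begins with the four ASCII bytes `GGUF`); the function name `find_gguf_models` and the folder path are hypothetical, and this is not a description of how Lowkey itself scans its models directory.

```python
from pathlib import Path

# Every GGUF file starts with these four ASCII bytes.
GGUF_MAGIC = b"GGUF"


def find_gguf_models(models_dir):
    """Return sorted paths of *.gguf files in models_dir whose header looks valid.

    models_dir is a hypothetical path; use whatever folder you keep models in.
    """
    found = []
    for path in sorted(Path(models_dir).glob("*.gguf")):
        with path.open("rb") as f:
            # A truncated or mislabeled download will fail this check.
            if f.read(4) == GGUF_MAGIC:
                found.append(path)
    return found


if __name__ == "__main__":
    for model in find_gguf_models("models"):
        print(model.name)
```

A file that is missing from the output despite having a `.gguf` extension is most likely an incomplete download or a different format that was renamed.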

Does Lowkey stream responses?

Yes. Responses are streamed token-by-token for a fast, responsive experience.
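For the curious, token-by-token streaming boils down to writing each piece of text as soon as it exists instead of waiting for the full reply. The sketch below illustrates the idea with a stand-in generator; `fake_token_source` and `stream_reply` are hypothetical names, no real model is involved, and this is not Lowkey's actual code.

```python
import sys
from typing import Iterable, Iterator


def fake_token_source(text: str) -> Iterator[str]:
    """Stand-in for a model: yields the text one word-sized piece at a time."""
    for i, word in enumerate(text.split()):
        # Re-attach the separating space to every piece after the first.
        yield word if i == 0 else " " + word


def stream_reply(tokens: Iterable[str], out=sys.stdout) -> str:
    """Write each token as soon as it arrives and return the full reply."""
    parts = []
    for tok in tokens:
        out.write(tok)
        out.flush()  # push the token to the screen immediately
        parts.append(tok)
    return "".join(parts)


if __name__ == "__main__":
    stream_reply(fake_token_source("Hello from a local model"))
    print()
```

The reader starts seeing output after the first token rather than after the last one, which is why a streamed reply feels quicker even when total generation time is unchanged.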

System Requirements

Do I need a GPU?

No. Lowkey works on CPU, but GPU acceleration is supported if available.

What are the minimum requirements?

Honestly? Even a potato PC will do. Smaller models run faster on modest hardware.

Open Source

Is Lowkey open source?

Yes. Lowkey is open source and available on GitHub.

Can I contribute?

Man, do it. I'd love some help! Check out the GitHub repo for contribution guidelines.