In the current landscape of Artificial Intelligence, we are often told that bigger is better. We are pushed toward massive cloud-based models that require a constant internet connection, monthly subscriptions, and a willingness to hand over our data to third-party servers.

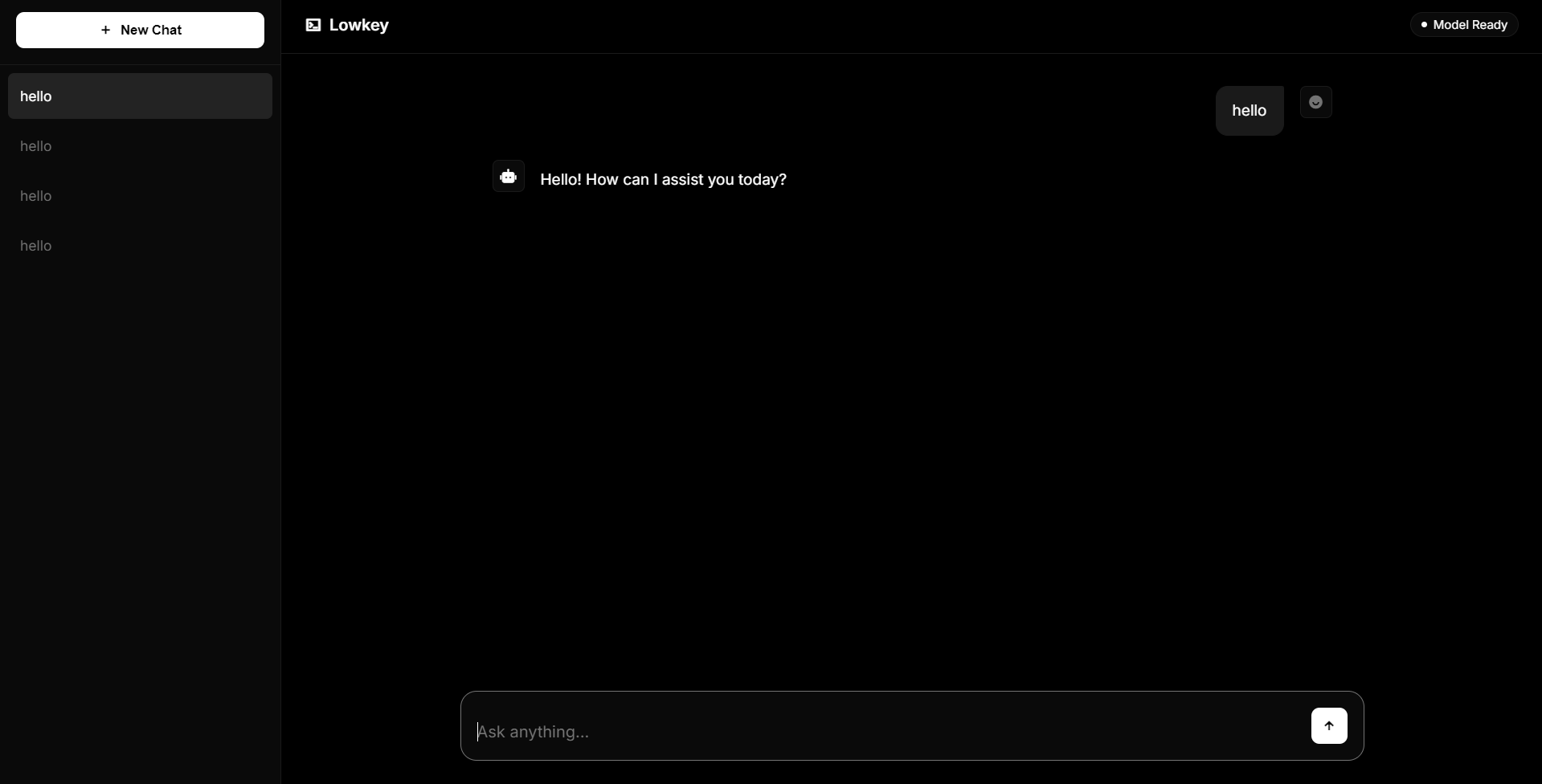

I wanted to build something different. I wanted an app that was insanely fast, completely private, and worked wherever I was—regardless of whether I had a Wi-Fi signal. That project is Lowkey, a dedicated offline chat application designed specifically for the Linux and Windows communities.

The Vision: Offline, Private, and Fast

The goal for Lowkey was simple: create a tool that feels like a native part of your operating system rather than a bloated web wrapper. For many users, the main barriers to local AI are hardware requirements and setup complexity. Lowkey is built to remove both.

By focusing on local inference, Lowkey ensures that your conversations never leave your machine. Whether you are a developer drafting code or a writer brainstorming ideas, your intellectual property stays exactly where it belongs—with you.

The Tech Stack: Bridging Platforms with Electron

To make Lowkey accessible to both Linux and Windows enthusiasts, I chose Electron. Electron has a reputation for being resource-heavy, so I’ve approached the implementation with a "performance-first" mindset.

I’ve optimized the backend so the UI feels native. The app responds to keyboard shortcuts immediately and manages the lifecycle of the local model in the background, so your system resources aren't drained while the app is idle.
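To make the idea of "handling the model lifecycle" concrete, here is a minimal sketch of one way to free a model after a period of inactivity. It is an illustration only, not Lowkey's actual code: `IdleModelManager`, `acquire`, `sweep`, and the idle limit are all hypothetical names, and loading is shown as synchronous to keep the example short.

```typescript
// Hypothetical sketch: none of these names come from Lowkey's codebase.
// A real loader would most likely be asynchronous; it is synchronous here
// only to keep the example small.
class IdleModelManager<M> {
  private model: M | null = null;
  private lastUsedMs = 0;
  private readonly load: () => M;
  private readonly unload: (model: M) => void;
  private readonly idleLimitMs: number;

  constructor(load: () => M, unload: (model: M) => void, idleLimitMs: number) {
    this.load = load;
    this.unload = unload;
    this.idleLimitMs = idleLimitMs;
  }

  // Returns the model, loading it on first use or after an idle unload.
  acquire(nowMs: number): M {
    if (this.model === null) {
      this.model = this.load();
    }
    this.lastUsedMs = nowMs;
    return this.model;
  }

  // Meant to be called periodically. Frees the model once it has been idle
  // for at least idleLimitMs and returns true if this call unloaded it.
  sweep(nowMs: number): boolean {
    if (this.model !== null && nowMs - this.lastUsedMs >= this.idleLimitMs) {
      this.unload(this.model);
      this.model = null;
      return true;
    }
    return false;
  }

  isLoaded(): boolean {
    return this.model !== null;
  }
}
```

In an app, `acquire(Date.now())` would run on every chat request and `sweep(Date.now())` on a timer. Passing the clock in explicitly keeps the logic easy to test.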

Why Qwen 0.5B?

The most critical decision in developing Lowkey was selecting the base model. I chose Qwen 0.5B. At first glance, 500 million parameters might seem "small" compared to the giants of the industry, but for a local chat app, this is a strategic advantage.

1. Near-Zero Latency

The speed of Qwen 0.5B is genuinely staggering. Tokens stream out faster than I can read them, and there is no noticeable "thinking" delay: the response starts almost as soon as you hit Enter.

2. Hardware Inclusivity

I wanted Lowkey to run smoothly on everything from high-end workstations to "potato" laptops. Because Qwen 0.5B has a tiny memory footprint, it doesn't require a dedicated high-end GPU. It breathes new life into older hardware, making AI accessible to everyone.

3. Purpose-Built Intelligence

While a 0.5B model might not solve complex multi-step physics equations, it is incredibly proficient at text transformation, basic coding assistance, and general drafting. For daily tasks, speed often beats sheer parameter count.
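Speed claims like the one in point 1 are easy to check on your own machine. The sketch below records time to first token and generation throughput for any token stream. `TokenStreamMeter` and its fields are hypothetical names, not part of Lowkey, and the timestamps are passed in so the helper works with any clock.

```typescript
// Hypothetical helper for timing a token stream; not part of Lowkey.
interface StreamStats {
  tokens: number;
  timeToFirstTokenMs: number | null; // null until the first token arrives
  tokensPerSecond: number | null;    // null until throughput can be measured
}

class TokenStreamMeter {
  private readonly startMs: number;
  private firstTokenMs: number | null = null;
  private lastTokenMs: number | null = null;
  private count = 0;

  // startMs is the moment the prompt was submitted.
  constructor(startMs: number) {
    this.startMs = startMs;
  }

  // Call once per generated token with the current time.
  onToken(nowMs: number): void {
    if (this.firstTokenMs === null) {
      this.firstTokenMs = nowMs;
    }
    this.lastTokenMs = nowMs;
    this.count += 1;
  }

  stats(): StreamStats {
    if (this.firstTokenMs === null || this.lastTokenMs === null) {
      return { tokens: 0, timeToFirstTokenMs: null, tokensPerSecond: null };
    }
    // Throughput covers the generation phase only (first token to last),
    // so the prompt-processing delay does not distort it.
    const elapsedMs = this.lastTokenMs - this.firstTokenMs;
    const tokensPerSecond =
      elapsedMs > 0 ? ((this.count - 1) * 1000) / elapsedMs : null;
    return {
      tokens: this.count,
      timeToFirstTokenMs: this.firstTokenMs - this.startMs,
      tokensPerSecond,
    };
  }
}
```

Create the meter when the user presses Enter, call `onToken(Date.now())` for each token the model emits, and read `stats()` when the reply finishes.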

[Image showing a comparison chart of LLM parameter counts and their corresponding RAM requirements]
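The link between parameter count and RAM comes down to simple arithmetic: each weight occupies a fixed number of bits. The sketch below estimates the memory needed for the weights alone. The post does not say which precision Lowkey uses, so the 16-bit and 4-bit figures are assumptions for illustration, and the 7-billion-parameter model is a hypothetical comparison point.

```typescript
// Rough estimate of the memory needed just to hold model weights.
// It ignores the KV cache, activations, and runtime overhead, so real
// usage will be higher than this number.
function estimateWeightMemoryGiB(
  parameterCount: number,
  bitsPerWeight: number,
): number {
  const bytes = parameterCount * (bitsPerWeight / 8);
  return bytes / (1024 * 1024 * 1024);
}

// 500 million parameters, as in a 0.5B model.
const smallAt16Bit = estimateWeightMemoryGiB(0.5e9, 16); // about 0.93 GiB
const smallAt4Bit = estimateWeightMemoryGiB(0.5e9, 4);   // about 0.23 GiB

// A hypothetical 7-billion-parameter model for comparison.
const largeAt16Bit = estimateWeightMemoryGiB(7e9, 16);   // about 13.0 GiB
```

Under these assumptions, a 0.5B model's weights fit in under 1 GiB even at 16-bit precision, which is why it can run on machines without a dedicated GPU.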

Open Source and User Freedom

Lowkey is built on the principle of open source. I believe that the future of AI should be transparent and customizable.

The app serves as a lightweight shell that empowers the user. While it ships with Qwen 0.5B for that out-of-the-box speed, the architecture is designed to be modular. If you have a beast of a machine with 64GB of RAM and a powerful GPU, you aren't locked in. You can swap the base model for Llama 3, Mistral, or any other model that fits your requirements.
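One common way to get this kind of modularity is to hide every model behind a small interface and pick the implementation by ID. The sketch below shows that pattern under stated assumptions: `ChatModel`, `ModelRegistry`, the model IDs, and the file path are all hypothetical, and the stand-in backend just echoes its input so the example runs without an inference library. It is not a description of how Lowkey is actually structured.

```typescript
// Hypothetical sketch of a swappable model backend; names are illustrative.
interface ChatModel {
  readonly name: string;
  generate(prompt: string): string;
}

type ModelFactory = (modelPath: string) => ChatModel;

class ModelRegistry {
  private readonly factories = new Map<string, ModelFactory>();

  register(id: string, factory: ModelFactory): void {
    this.factories.set(id, factory);
  }

  // Builds the model registered under `id`, or throws if the ID is unknown.
  create(id: string, modelPath: string): ChatModel {
    const factory = this.factories.get(id);
    if (factory === undefined) {
      throw new Error(`Unknown model id: ${id}`);
    }
    return factory(modelPath);
  }

  ids(): string[] {
    return Array.from(this.factories.keys());
  }
}

// Stand-in backend: echoes the prompt instead of running real inference.
function echoBackend(name: string): ModelFactory {
  return (modelPath) => ({
    name,
    generate: (prompt) => `[${name} @ ${modelPath}] ${prompt}`,
  });
}

const registry = new ModelRegistry();
registry.register("qwen-0.5b", echoBackend("Qwen 0.5B"));
registry.register("llama-3", echoBackend("Llama 3"));

// The UI depends only on ChatModel, so switching models means changing
// the ID and path passed here (both placeholders in this sketch).
const model = registry.create("llama-3", "/path/to/model-file");
```

Because the chat UI only ever calls `generate`, a larger model can be dropped in without touching the rest of the app.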

Beyond the Cloud

Lowkey is more than just a chat app; it’s a rejection of the "AI-as-a-Service" monopoly. It shows that you don't need a massive data center to have a helpful assistant. You just need smart code, an efficient model, and a focus on the user experience.

Whether you're looking for a distraction-free writing environment or a quick offline coding companion, Lowkey is designed to stay out of your way and just work.